Depth-Aware Scoring and Hierarchical Alignment for Multiple Object Tracking

The paper 'Depth-Aware Scoring and Hierarchical Alignment for Multiple Object Tracking' by Milad Khanchi, Maria Amer, and Charalambos Poullis has been accepted for publication at the IEEE International Conference on Image Processing (ICIP) 2025.

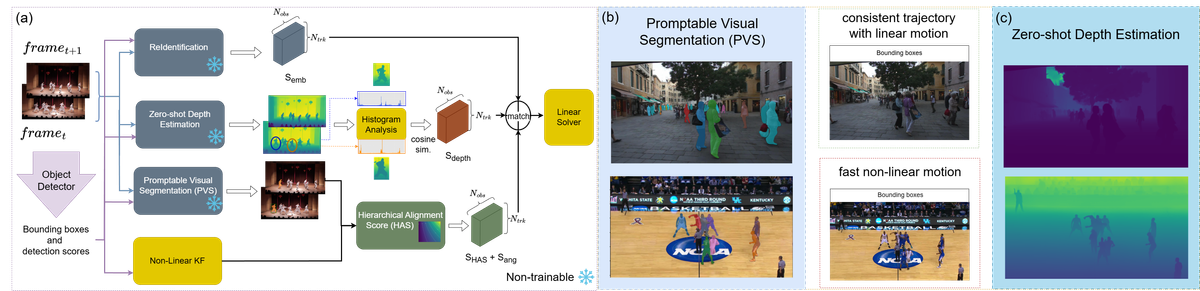

TL;DR: DepthMOT is a multiple object tracking framework that improves object association by using monocular depth estimation as a standalone feature, applied zero-shot without any training. It also introduces a Hierarchical Alignment Score that combines coarse bounding box overlap with fine-grained pixel-level alignment, making association more accurate in scenes with occlusion or similar-looking objects. DepthMOT achieves state-of-the-art results on challenging benchmarks without any training or fine-tuning.
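To make the idea of a two-level (coarse plus fine) alignment score and a separate depth cue more concrete, here is a minimal, standard-library Python sketch. It is not the paper's formulation. It assumes that "pixel-level alignment" can be approximated by the IoU of binary segmentation masks, and that the two levels are blended linearly. The function names `box_iou`, `mask_iou`, `hierarchical_alignment_score`, and `depth_similarity`, as well as the `weight` parameter and the mean-depth similarity, are all hypothetical choices made for illustration. For the actual method, see the linked paper and repository.

```python
from typing import Sequence, Tuple

# Axis-aligned box as (x1, y1, x2, y2) in pixel coordinates.
Box = Tuple[float, float, float, float]
# Binary segmentation mask as rows of 0/1 (or False/True) values.
Mask = Sequence[Sequence[int]]


def box_iou(a: Box, b: Box) -> float:
    """Coarse level: intersection-over-union of two bounding boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def mask_iou(m1: Mask, m2: Mask) -> float:
    """Fine level (ASSUMED): pixel-wise IoU of two same-sized binary masks."""
    inter = union = 0
    for row1, row2 in zip(m1, m2):
        for p, q in zip(row1, row2):
            inter += int(bool(p) and bool(q))
            union += int(bool(p) or bool(q))
    return inter / union if union else 0.0


def hierarchical_alignment_score(
    box_a: Box, box_b: Box, mask_a: Mask, mask_b: Mask, weight: float = 0.5
) -> float:
    """HYPOTHETICAL blend of coarse box overlap and fine mask overlap.

    `weight` is an illustrative parameter, not a value from the paper.
    """
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must lie in [0, 1]")
    return weight * box_iou(box_a, box_b) + (1.0 - weight) * mask_iou(mask_a, mask_b)


def depth_similarity(depth_a: float, depth_b: float) -> float:
    """HYPOTHETICAL standalone depth cue from mean per-object depth.

    Maps the absolute depth gap to (0, 1]; equal depths give 1.0.
    """
    return 1.0 / (1.0 + abs(depth_a - depth_b))


if __name__ == "__main__":
    # Toy track/detection pair: partially overlapping boxes and tiny masks.
    track_box, det_box = (0, 0, 2, 2), (1, 1, 3, 3)
    track_mask = [[1, 1], [0, 0]]
    det_mask = [[1, 0], [0, 0]]
    print(hierarchical_alignment_score(track_box, det_box, track_mask, det_mask))
    print(depth_similarity(2.0, 3.0))
```

The depth cue is deliberately kept as a separate function rather than folded into the alignment score, echoing the TL;DR's description of depth as a standalone feature. How DepthMOT actually weights and combines these cues in its association step is not shown here.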

Research paper: https://arxiv.org/abs/2506.00774

Source code: https://github.com/Milad-Khanchi/DepthMOT